Businesses use customer satisfaction surveys to gauge how their customers feel about them. Surveys also help identify pain points in the customer’s journey and show where to improve your product or service. A good experience will drive revenue; a bad one could haunt you for years.

Twenty-four percent of satisfied customers will keep returning to a business for more than two years after a good experience, but 39% will avoid it for the same amount of time after a bad one. Customer surveys are the best way to find out which kind of experience you are delivering.

A good survey is built on good questions, and good questions start with a few fundamentals: focus, brevity, good grammar, and clarity.

What are the components of a “good” customer satisfaction survey question?

There are some tips and tricks to writing good questions and some pitfalls that you can easily avoid—if you’re aware of them.

Questions should be short and to the point.

Try to make your questions short but meaningful. The most common (and best) CSAT question is:

How did you like our product or service?

a) It was great (loved)

b) It was pretty good (liked)

c) It was okay (neutral)

d) It was not good (disliked)

e) It was awful (hated)

Simple and to the point. This is how you would ask a friend about a product if you wanted an honest answer. You probably wouldn’t ask your friend: “Considering their long history of philanthropy and social awareness regarding the environment, how did you find [company’s] product or service?” That’s too much to unpack.

Takeaway: Short and to the point gets you a more direct (and more honest) answer.

Ask about specific objectives and avoid double-barreled questions.

Start with a specific objective, like customer service or product usability. Try not to ask about both in one sentence. For instance, avoid questions with two objectives like: “Was customer service helpful, and would you purchase from us again?” That’s known as a double-barreled question, and it happens more often than you’d think.

Even breaking one concept down into multiple questions will give you a clearer answer. Asking “Was your problem resolved?” before “Was customer service helpful in resolving your problem?” tells you whether customers solved the problem on their own.

Takeaway: Be on the lookout for the word “and.” It’s often a sign that you’re asking more than one question.
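The “and” check in this takeaway can be roughed out as a small script for reviewing a draft question list. This is only a sketch of a heuristic, not a grammar checker: the function name `flag_double_barreled` and the sample questions are made up for illustration, and a flagged question still needs a human to decide whether it really asks two things.

```python
import re

def flag_double_barreled(questions):
    """Return the questions that contain the standalone word 'and'.

    Hypothetical helper: it only spots the word, it cannot tell whether
    the question truly has two objectives.
    """
    pattern = re.compile(r"\band\b", re.IGNORECASE)
    return [q for q in questions if pattern.search(q)]

# Sample draft questions, taken from the examples in this article.
draft = [
    "Was customer service helpful, and would you purchase from us again?",
    "Was your problem resolved?",
    "Did you like our website?",
]

flagged = flag_double_barreled(draft)
for question in flagged:
    print("Review:", question)
```

Matching on word boundaries means a word like “brand” is not flagged just because it contains the letters “and.”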

Ask customers to speak their mind through open-ended questions.

Always ask for the customer’s opinion, for example: “What could we do to improve?” A great way to understand customers better is to let them speak, and the way to do that in a CSAT survey is to ask for their opinion in their own words. Open-ended questions often start with:

“Tell us in your own words…”

“In just a few short sentences, tell us…”

“What do you think…”

The good thing about these questions is that they work well with engaged customers who are willing to let you know how they feel. Try not to overuse them, or respondents may suffer from survey fatigue and just give up answering the rest.

Takeaway: It’s a best practice to place open-ended questions at the end of the survey after your customer has already answered the primary questions. If you lead with “tell us in your own words” as the first question, most respondents will see it as a sign that some additional work has to be put into the survey, which may lead to a drop-off in responses.

What types of customer satisfaction survey questions should I avoid?

Avoiding the pitfalls that all survey creators face is just as important as writing good questions. Address these problems before the final survey goes out. Respondents will not tolerate a messy, poorly constructed survey and may simply give up. Unfortunately, some of these mistakes are fairly hard to catch.

Leave out: Questions that assume to know the customer.

The easiest bad question to recognize, and one that still manages to slip into some surveys, is the assumptive question. “How do you like your jalapeños, fresh or deep-fried?” You’re already assuming the customer eats jalapeños, which they may not. When customers see a question like that, they may avoid the rest of the survey because it seems like you don’t understand them.

Instead, consider wording the question as simply: “Do you like jalapeños?” They may be more inclined to answer this question and continue the survey.

Takeaway: Questions built on assumptions can push customers to abandon the survey, which costs you reliable, useful data.

Leave out: Leading questions.

Being proud of a company’s achievements is certainly something to hang your hat on—but try not to do it in a customer survey question. An example we’ve seen of leading the customer to a conclusion is asking a question like: “We recently spent a million dollars on this great website for customers like you. Do you like it?” That kind of leading question forces the customer to respond positively by guilting them. Or, they might ditch the rest of the survey, which you don’t want.

A better practice is to subtract from the question, not add to it. The question would be more effective if it simply asked: “Did you like our website?”

Takeaway: More often than not you can leave out qualifiers.

Check for poor grammatical usage.

A grammatically correct question will prove much more effective than a poorly written and hard-to-understand question. If a respondent sees a sloppy or poorly worded sentence, their first assumption is that you didn’t care enough to ask them something important in an intelligent and professional way.

With the prevalence of scams, poor grammar can scream “fraud” to some of your customers. If your questions are poorly worded, you also run the risk of being ignored. Always have your questions proofread, or use a reliable survey company to compose the questions for you.

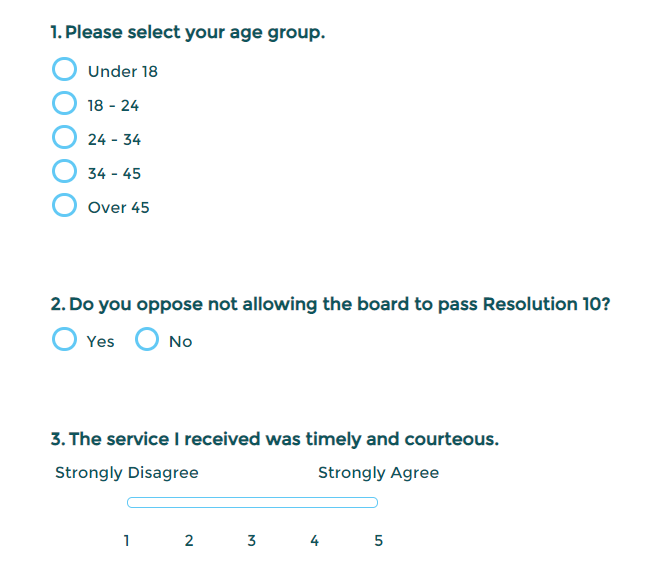

Look for sneaky grammatical choices like using the double-negative: “Was our customer service not unhelpful?” or “Do you agree that your customer service experience was not uncommon?” This kind of question only adds confusion to your survey.

Question 2 in the image above, an example from SoGo Survey, shows the seemingly innocuous double negative in action.

Takeaway: Mistakes happen, but most can be caught by getting an extra pair of eyes on your survey before it goes out.

Limit similar-sounding questions.

No one likes to take long surveys. We’re reminded that time is a precious commodity every time we use a microwave. When it comes to surveys that take longer than 10 minutes, 60% of people don’t like them, and 87% of people say the same about surveys longer than 20 minutes. This is why you should try to limit the number of similar-sounding questions. If you ask: “How did you like our product?” and then five questions later you rephrase the question and ask how strongly they agree with the statement: “I liked the product,” you’re simply going to annoy the respondent. Review your question list to weed out any questions that have already been answered.

There is, however, one case where you may want to repeat a question: to defend against acquiescence bias. Acquiescence bias happens when a respondent gives the same positive or negative answer to question after question. This isn’t necessarily because the respondent really feels this way, but because they simply want to get through the survey as quickly as possible. If you suspect that might happen with your survey, ask two related questions whose answers you can check against each other. For example:

Do you love to travel?

Which action best describes you?

If the respondent answers A or B to both questions, they may not be answering the survey truthfully: it’s unlikely that someone loves to travel but prefers to stay in with a good book. This usually happens when a respondent doesn’t want to take the time to answer the questions (survey fatigue in action) or is simply agreeing with every answer to please the survey creator or company (known as the “agreeability” theory).

Takeaway: If you see this happening repeatedly across your survey responses, take a good look at how the original question is phrased, and try to avoid yes/no or agree/disagree questions. Alternatively, you could use the paired questions as a litmus test for discarding a respondent’s answers.
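The litmus test described here can be sketched in a few lines. Everything in this example is an assumption made for illustration: the question keys `q_travel` and `q_action`, the respondent IDs, and especially the set of answer pairs treated as contradictory, which you would define from your own response options.

```python
# Hypothetical answer pairs that contradict each other (assumed here to be
# "same letter on both questions"); replace with pairs from your own survey.
CONTRADICTORY_PAIRS = {("A", "A"), ("B", "B")}

def flag_inconsistent(responses, first_key, second_key,
                      contradictory=CONTRADICTORY_PAIRS):
    """Return IDs of respondents whose answers to the paired questions contradict."""
    flagged = []
    for respondent_id, answers in responses.items():
        pair = (answers.get(first_key), answers.get(second_key))
        if pair in contradictory:
            flagged.append(respondent_id)
    return flagged

# Made-up responses keyed by respondent ID.
responses = {
    "r1": {"q_travel": "A", "q_action": "A"},
    "r2": {"q_travel": "A", "q_action": "C"},
    "r3": {"q_travel": "B", "q_action": "B"},
}

suspect = flag_inconsistent(responses, "q_travel", "q_action")
print(suspect)
```

A flag is a prompt to review, not proof of a careless respondent. If many respondents are flagged, the question wording is the more likely culprit.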

Summing up

Don’t waste your customer’s time—or your resources—by formulating poor customer satisfaction surveys with ineffective questions. Time is the one commodity that your company cannot give away. Customer satisfaction surveys could mean the difference between staying competitive and losing touch with your customers.

In the end, the best surveys help you learn more about your customers, serve them more efficiently, and keep them happy, which in turn increases revenue, loyalty, and brand recognition.
